Here’s the problem: web video is beginning to rival television, but there isn’t a good open resource for subtitling. Here’s our mission: we’re trying to make captioning, subtitling, and translating video publicly accessible in a way that’s free and open, just like the Web.

The SpokenMedia project was born out of research into automatic lecture transcription by the Spoken Language Systems group at MIT. Our approach has been twofold. First, we have been working with researchers to improve the automatic creation of transcripts, to enable search and perhaps accessible captions, and doing what we can from a process standpoint to improve accuracy. Second, we have been working on tools to address accuracy from a human editing perspective. In this approach we would provide these tools to lecture video publishers, but we have also considered setting up a process to enable crowdsourced editing.

Recently we learned of a new project, Universal Subtitles (now Amara), and their Mozilla design challenge for Collaborative Subtitles. Both projects, or approaches, are interesting, and we’ll be keeping an eye on their progress. The same goes for UToronto’s OpenCaps project, which is part of the Opencast Matterhorn suite.

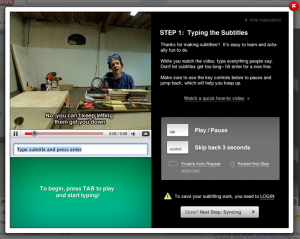

Here’s a screenshot from the Universal Subtitles project.

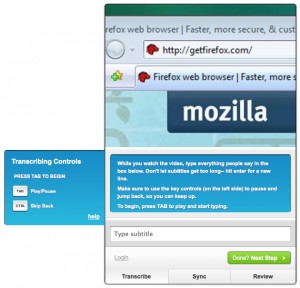

Here’s a screenshot of the caption widget from the Collaborative Subtitles project.