MIT’s OpenCourseWare (OCW) uses MIT’s Google Search Appliance (GSA) to search its content, and MIT supports customizing GSA results through XSL transformations. This post describes how we plan to use the GSA to search lecture transcripts and return results that point to the lecture videos in which the search terms appear. Because OCW publishes static content, it doesn’t incorporate a search engine of its own; search is provided through the GSA.

Running the Baseline Recognizer

The software that processes lecture audio into a textual transcript consists of a series of scripts that marshal input files and parameters to a speech recognition engine. Interestingly, because the engine is data-driven, its code seldom changes; improvements in performance and accuracy come from refining the data it uses to perform its tasks.

Producing a transcript takes two steps. The first creates an audio file in the format the speech recognition engine expects. The second processes that audio file into the transcript.
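The first step should end with an audio file the engine can accept. As a minimal sketch, the check below reads the format fields from a WAV header before the file is passed on to recognition. The target format (16 kHz, mono, 16-bit PCM) is an assumption for illustration, as is the simplification that the `fmt ` chunk immediately follows the RIFF header; neither comes from the SpokenMedia scripts.

```typescript
// Fields read from a WAV header. The values the recognizer needs are an
// ASSUMPTION here; the post does not specify the engine's input format.
interface WavFormat {
  audioFormat: number; // 1 = uncompressed PCM
  channels: number;
  sampleRate: number; // Hz
  bitsPerSample: number;
}

// Hypothetical target format for the speech recognition engine.
const ASSUMED_TARGET: WavFormat = {
  audioFormat: 1,
  channels: 1,
  sampleRate: 16000,
  bitsPerSample: 16,
};

// Reads a canonical RIFF/WAVE header, i.e. one whose "fmt " chunk directly
// follows the 12-byte RIFF header. Files with other chunks first would need
// a real chunk walker.
function readWavFormat(bytes: Uint8Array): WavFormat {
  if (bytes.length < 36) {
    throw new Error("too short to contain a WAV format header");
  }
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const tag = (offset: number): string => {
    let s = "";
    for (let i = 0; i < 4; i++) s += String.fromCharCode(view.getUint8(offset + i));
    return s;
  };
  if (tag(0) !== "RIFF" || tag(8) !== "WAVE" || tag(12) !== "fmt ") {
    throw new Error("not a canonical RIFF/WAVE file");
  }
  // All WAV header fields are little-endian.
  return {
    audioFormat: view.getUint16(20, true),
    channels: view.getUint16(22, true),
    sampleRate: view.getUint32(24, true),
    bitsPerSample: view.getUint16(34, true),
  };
}

// Gate between step 1 (conversion) and step 2 (recognition): only hand the
// file to the engine if every header field matches the target.
function isReadyForRecognition(bytes: Uint8Array, target: WavFormat = ASSUMED_TARGET): boolean {
  const f = readWavFormat(bytes);
  return (
    f.audioFormat === target.audioFormat &&
    f.channels === target.channels &&
    f.sampleRate === target.sampleRate &&
    f.bitsPerSample === target.bitsPerSample
  );
}
```

Keeping this check separate from the conversion itself means a misconfigured conversion fails fast instead of producing a poor transcript.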

SpokenMedia Transcript Editor

We’re working on a JavaScript-based transcript editor with our developer, Ryan Lee at voccs.com.

The goals of the editor project are:

- Low- and high-accuracy editors: We believe the best approach to transcript editing is to separate it into two distinct modes, depending on how accurate the transcript is. When the transcript is mostly accurate, we want to retain the time/word relationships; that is, for every word, we keep the timecode associated with that word. When the transcript is mostly inaccurate, we believe it’s best to edit the transcript as a single block of text and then align the edited transcript to the audio once editing is complete. Unfortunately, this realignment requires reprocessing the video, which introduces a delay (in the best case, about 1:1.5).

- Be simple and intuitive to use.

- Have a clean design.

- Require as little extra mousing and clicking as possible (this is the one compelling reason for us to have separate “low” and “high” accuracy editors).

- Integrate an audio/video player within the UI of the transcript editor (instead of running the audio/video in a separate application or window).

- Implement an editing communication protocol between the server and the client browser.
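To make the difference between the two editing modes concrete, here is a hypothetical data model: each word carries its own start and end time, a single-word correction (the kind of message a server/client editing protocol might carry) changes only the text, and the low-accuracy path flattens the transcript to plain text, dropping the timings that realignment would later have to restore. The `ReplaceWordMessage` shape and all other names are illustrative assumptions, not the editor’s actual protocol.

```typescript
// One recognized word and the span of audio it covers, in seconds.
interface TimedWord {
  text: string;
  start: number;
  end: number;
}

// HYPOTHETICAL message the browser might send the server for a single-word
// correction in the high-accuracy editor. Field names are illustrative only.
interface ReplaceWordMessage {
  type: "replaceWord";
  transcriptId: string;
  index: number; // position of the word in the transcript
  text: string; // corrected text
}

// High-accuracy mode: a correction changes only the word's text, so its
// timecode (start/end) is retained. Returns a new array; input is unchanged.
function applyReplaceWord(words: readonly TimedWord[], msg: ReplaceWordMessage): TimedWord[] {
  if (msg.index < 0 || msg.index >= words.length) {
    throw new RangeError(`word index ${msg.index} out of range`);
  }
  return words.map((w, i) => (i === msg.index ? { ...w, text: msg.text } : w));
}

// Low-accuracy mode: the transcript is edited as one block of plain text.
// Per-word timings are discarded here and would have to be recovered by
// realigning the edited text to the audio afterwards.
function toPlainText(words: readonly TimedWord[]): string {
  return words.map((w) => w.text).join(" ");
}
```

Sending small word-level messages rather than the whole transcript keeps each correction cheap, which fits the goal of minimal extra clicking in the high-accuracy editor.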

We’ve seen some initial designs from Ryan. Once this design phase is complete, we’ll post the editors with transcripts and begin testing.

Extending the Spiral Connect Player

Christophe Battier, Jean Baptiste Nallet and Alexandre Louys from ICAP at the Université de Lyon 1 in France visited the SpokenMedia team in February 2010.

They are working on a new version of their virtual learning environment (VLE) — a learning management system (LMS) in American-speak — that has an integrated video player with a number of features of interest to SpokenMedia.

The player is Flash-based and provides the ability for users to create “bubbles” — or annotations/bookmarks — that overlay the video. These bubbles can be seen along a timeline, and can be used to provide feedback from teacher to student or highlight interesting aspects of the video.

Here’s a screenshot from the current version of the Spiral player:

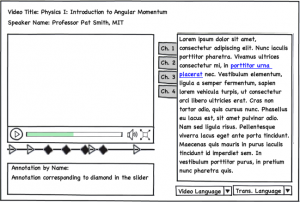

We discussed with them integrating aspects of the transcript display from the player we’ve been developing.

In our player, the user can watch the video and see the transcript with a “bouncing ball” highlighting the phrase being said. The user can switch between transcripts in multiple languages, and can search through the transcript and play back the video by double-clicking a search result.
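The “bouncing ball” reduces to a lookup: given the video’s current playback time, find the phrase whose start time was passed most recently. A minimal sketch using binary search, assuming phrases are sorted by start time (an illustration, not the player’s actual code):

```typescript
// A transcript phrase and the time, in seconds, at which it begins.
// ASSUMPTION: phrases are sorted by start time.
interface Phrase {
  start: number;
  text: string;
}

// Returns the index of the phrase being spoken at time t, i.e. the last
// phrase whose start is <= t, or -1 if t is before the first phrase.
// Binary search keeps each lookup O(log n) for long lectures.
function activePhraseIndex(phrases: readonly Phrase[], t: number): number {
  let lo = 0;
  let hi = phrases.length - 1;
  let found = -1;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    if (phrases[mid].start <= t) {
      found = mid; // candidate; look for a later phrase that also started
      lo = mid + 1;
    } else {
      hi = mid - 1;
    }
  }
  return found;
}
```

In a browser, this could run in the media element’s `timeupdate` handler with `video.currentTime`; re-highlighting only when the returned index changes avoids needless DOM updates.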

We talked about how the SpiralConnect team might extend their player to integrate transcripts, and also to create annotations that could be displayed below (or to the side of) the video, not just overlaid on the video image.

Here’s a mockup of what we discussed.

SpokenMedia Player

We’ve developed a first pass of a new video player for SpokenMedia that integrates video playback and transcript display. (Well, OK, we did this in late December and first demoed it in January with IIHS.)

Our goals for the player:

- Be a JavaScript-based player

- Play multiple videos on the same page

- Highlight the phrase corresponding to the current point in the video

- Be able to switch between multiple audio tracks (if they are available)

- Be able to switch between transcripts in multiple languages (if they are available)

- Be able to search through a transcript and play the video by clicking on a search result

- Be able to open source the player

- Include the OEIT logo
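Transcript search and click-to-play can be sketched the same way, assuming each transcript is a list of timed phrases stored per language. The names below (`searchInLanguage`, `playFromHit`, the `Seekable` shape) are hypothetical; `currentTime` and `play()` are standard HTMLMediaElement members, so an HTML5 `<video>` element fits the `Seekable` interface.

```typescript
// A transcript phrase and the time, in seconds, at which it begins.
interface Phrase {
  start: number;
  text: string;
}

// ASSUMPTION: one transcript per language, keyed by a language code.
type TranscriptsByLanguage = Record<string, Phrase[]>;

interface SearchHit {
  index: number; // position of the phrase in its transcript
  start: number; // where playback should begin
  text: string;
}

// Case-insensitive substring search over one transcript's phrases.
function searchTranscript(phrases: readonly Phrase[], query: string): SearchHit[] {
  const q = query.trim().toLowerCase();
  if (q === "") return [];
  const hits: SearchHit[] = [];
  phrases.forEach((p, index) => {
    if (p.text.toLowerCase().includes(q)) hits.push({ index, start: p.start, text: p.text });
  });
  return hits;
}

// Search whichever transcript language the user has selected; an unknown
// language simply yields no hits.
function searchInLanguage(all: TranscriptsByLanguage, lang: string, query: string): SearchHit[] {
  return searchTranscript(all[lang] ?? [], query);
}

// The minimal media interface the player needs; HTMLVideoElement satisfies it.
interface Seekable {
  currentTime: number;
  play(): unknown;
}

// Click handler for a search result: jump to the phrase and start playback.
function playFromHit(media: Seekable, hit: SearchHit): void {
  media.currentTime = hit.start;
  void media.play(); // play() returns a Promise in browsers; ignored here
}
```

Typing the media as a small structural interface rather than `HTMLVideoElement` keeps the search and seek logic independent of any one player implementation.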

We worked with a great developer, Ryan Lee at voccs.com, to develop the player.

We used the player as part of our demo for the Indian Institute for Human Settlements.